At camelcamelcamel we use CDK to deploy our infrastructure. We have a bunch of auto-scaling groups (ASGs) behind an Application Load Balancer (ALB). We recently started noticing that a deployment that refreshes the ASG instances would cause a lot of 502 errors for a few minutes. This surprised us, as we already use health checks, rolling updates and signals as recommended. To get to the bottom of it, we built a timeline of the events for one specific group:

- [22:50:07] CloudFormation began updating the auto-scaling group

- [22:51:37] One old instance is terminated

- [22:52:12] A new instance is being launched

- [22:54:06] The new instance’s logs show a successful health check from the ALB

- [22:54:14] The new instance signals that it’s ready

- [22:54:15] The second old instance is terminated

- [22:54:48] A second new instance is being launched

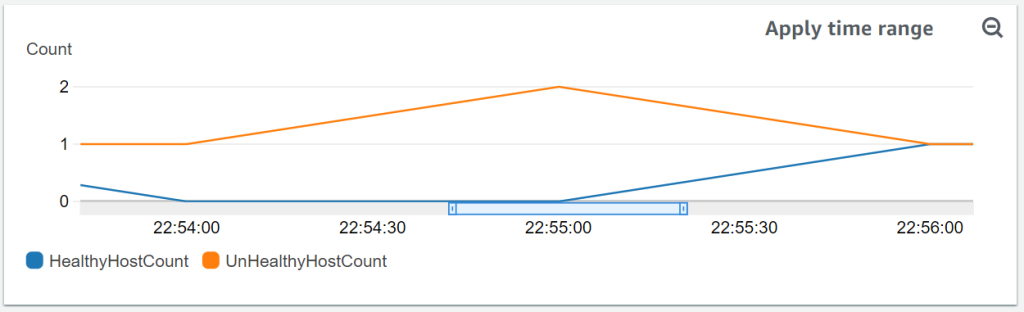

- [22:54:52] ALB logs start showing 502 errors trying to route requests to the second new instance

- [22:56:50] The second new instance signals that it’s ready

- [22:56:50] No more 502s

What struck us as odd was this only happened on the last instance of each group. ALB waited until the first instances of the group were ready before sending traffic their way. But for the last instance, ALB just started hammering it with traffic immediately after it was launched.

We ended up contacting AWS support to ask why the last instance of our rolling update gets requests before it’s ready. They pointed out that all of our instances were unhealthy at that time and therefore the ALB failed open. When all the instances are unhealthy, ALB starts sending requests to all of them in the hope that some can still handle them. The occasional handled request is better than no requests being handled at all.
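The fail-open rule described by support can be sketched in a few lines of Python. This is only a toy model of the routing decision, not ALB's actual implementation; the `Target` class, the `routable_targets` function and the instance IDs are hypothetical names made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Target:
    instance_id: str
    healthy: bool


def routable_targets(targets: List[Target]) -> List[Target]:
    """Return the targets a fail-open load balancer would route to."""
    healthy = [t for t in targets if t.healthy]
    # Fail-open: with zero healthy targets, every registered target is eligible.
    return healthy if healthy else list(targets)


# Hypothetical snapshot mid-deployment: the first new instance is up but has
# not passed enough health checks yet, the second is still installing.
targets = [Target("i-new-1", healthy=False), Target("i-new-2", healthy=False)]
print([t.instance_id for t in routable_targets(targets)])
```

With both targets unhealthy, both are returned, which matches what we saw: the instance that was still installing received traffic.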

But why was the first new instance unhealthy even though it was ready? ALB only treats an instance as healthy after a given number of consecutive health checks pass. We were using the default CDK target group health check configuration, which requires 5 consecutive successful health checks at a 30-second interval. That means any instance takes roughly two and a half minutes to be considered healthy after it’s ready. That delay was long enough, and our scaling group small enough, for the last instance to start up while all the other new instances were still considered unhealthy.
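To make the timing concrete, here is a rough calculation using the timestamps from the timeline. It assumes the health check logged at 22:54:06 was the first of the five consecutive successes and that checks arrive exactly 30 seconds apart; both are assumptions, so treat the result as an estimate. The helper names are ours.

```python
from datetime import datetime, timedelta

HEALTHY_THRESHOLD = 5             # consecutive successful checks required
INTERVAL = timedelta(seconds=30)  # time between health checks


def earliest_healthy(first_success: datetime,
                     threshold: int = HEALTHY_THRESHOLD,
                     interval: timedelta = INTERVAL) -> datetime:
    # The first success counts as check 1, so (threshold - 1) intervals follow.
    return first_success + (threshold - 1) * interval


def parse(ts: str) -> datetime:
    # Only the time of day matters here; the date part is a placeholder.
    return datetime.strptime(ts, "%H:%M:%S")


first_success = parse("22:54:06")   # first successful check on new instance 1
old_terminated = parse("22:54:15")  # second old instance terminated

healthy_at = earliest_healthy(first_success)
print(healthy_at.strftime("%H:%M:%S"))  # 22:56:06
print(healthy_at > old_terminated)      # True
```

Even in this best case, the first new instance could not have been marked healthy before about 22:56:06, almost two minutes after the last old instance was terminated. During that window the target group had no healthy targets at all.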

The root cause of it all seems to be that the auto-scaling group doesn’t wait for ALB to consider the new instance healthy before moving on with the scaling operation, which removes the instances that are still considered healthy. We expected the recommended CDK ALB/ASG configuration to deal with this, but apparently it doesn’t.

AWS suggested a few possible solutions including adding more instances to the group and using life-cycle hooks. Life-cycle hooks allow us to control when an auto-scaling group instance is considered launched. They will delay the scaling operation until we complete the hook ourselves. So we can complete the hook only after the instance is ready and everything is installed.

As the AWS documentation puts it:

> A popular use of lifecycle hooks is to control when instances are registered with Elastic Load Balancing. By adding a launch lifecycle hook to your Auto Scaling group, you can ensure that your bootstrap scripts have completed successfully and the applications on the instances are ready to accept traffic before they are registered to the load balancer at the end of the lifecycle hook.
>
> https://docs.aws.amazon.com/autoscaling/ec2/userguide/lifecycle-hooks.html

To integrate life-cycle hooks with CDK we added three things: a life-cycle hook on our ASG, a call to `aws autoscaling complete-lifecycle-action` at the end of our user-data script, and a policy on our role that allows completing the life-cycle action.

Most of the code deals with preventing circular dependencies in CloudFormation. The call itself is a one-liner, but we need to get the ASG name and hook name without depending on the ASG. The user-data script goes into the launch configuration, and the ASG depends on the launch configuration, so the user-data script can’t in turn depend on the ASG.

    import aws_cdk as core
    import aws_cdk.aws_autoscaling as autoscaling
    import aws_cdk.aws_iam as iam
    from constructs import Construct


    class MyAsgStack(core.Stack):
        def __init__(self, scope: Construct, id_: str, **kwargs) -> None:
            super().__init__(scope, id_, **kwargs)

            asg = autoscaling.AutoScalingGroup(
                self, "ASG",
                # ...
            )

            # the hook has to be named to avoid circular dependency between the launch config, asg and the hook
            hook_name = "my-lifecycle-hook"

            # don't let instances be added to ALB before they're fully installed.
            # we had cases where the instance would be installed but still unhealthy because it didn't pass enough
            # health checks. if all the instances were like this at once, ALB would turn fail-open and send requests
            # to the last instance of the bunch that was still installing our code.
            # https://console.aws.amazon.com/support/home?#/case/?displayId=9677283431&language=en
            # https://docs.aws.amazon.com/autoscaling/ec2/userguide/lifecycle-hooks.html
            asg.add_lifecycle_hook(
                "LifeCycle Hook",
                lifecycle_transition=autoscaling.LifecycleTransition.INSTANCE_LAUNCHING,
                # if the hook times out before we complete it, abandon the instance
                default_result=autoscaling.DefaultResult.ABANDON,
                lifecycle_hook_name=hook_name,
            )

            asg.add_user_data(
                # get ASG name (we could use core.PhysicalName.GENERATE_IF_NEEDED again, but the ASG already exists)
                f"ASG_NAME=`aws cloudformation describe-stack-resource --region {self.region} "
                f"--stack-name {core.Aws.STACK_NAME} --logical-resource-id {self.get_logical_id(asg.node.default_child)} "
                f"--query StackResourceDetail.PhysicalResourceId --output text`",
                # get instance id
                "INSTANCE_ID=`ec2-metadata | grep instance-id | head -n 1 | cut -d ' ' -f 2`",
                # complete life-cycle event
                f"aws autoscaling complete-lifecycle-action --lifecycle-action-result CONTINUE "
                f"--instance-id \"$INSTANCE_ID\" "
                f"--lifecycle-hook-name '{hook_name}' "
                f"--auto-scaling-group-name \"$ASG_NAME\" "
                f"--region {core.Aws.REGION}"
            )

            # let instance complete the life-cycle action
            # we don't need cloudformation:DescribeStackResource as CDK already adds that one automatically for the signals
            iam.Policy(
                self,
                "Life-cycle Hook Policy",
                statements=[
                    iam.PolicyStatement(
                        actions=["autoscaling:CompleteLifecycleAction"],
                        resources=[asg.auto_scaling_group_arn],
                    )
                ],
                roles=[asg.role],
            )
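If your bootstrap logic is written in Python rather than shell, the same completion call can be made through boto3's Auto Scaling client. The sketch below is not what we run in production; `complete_launch_hook` and `DryRunClient` are hypothetical names, and the client is passed in so the function can be tried without AWS credentials. In real use you would pass `boto3.client("autoscaling")` instead of the dry-run stub.

```python
from typing import Any, Dict, List


def complete_launch_hook(client: Any, asg_name: str, hook_name: str,
                         instance_id: str, ok: bool = True) -> Any:
    """Complete a launch life-cycle hook for one instance.

    `client` is assumed to behave like boto3.client("autoscaling").
    """
    return client.complete_lifecycle_action(
        AutoScalingGroupName=asg_name,
        LifecycleHookName=hook_name,
        InstanceId=instance_id,
        # CONTINUE puts the instance in service; ABANDON has it terminated.
        LifecycleActionResult="CONTINUE" if ok else "ABANDON",
    )


class DryRunClient:
    """Stand-in for the boto3 client that only records the calls it receives."""

    def __init__(self) -> None:
        self.calls: List[Dict[str, str]] = []

    def complete_lifecycle_action(self, **kwargs: str) -> Dict[str, str]:
        self.calls.append(kwargs)
        return {}


client = DryRunClient()
# The ASG name and instance ID here are placeholders.
complete_launch_hook(client, "my-asg", "my-lifecycle-hook", "i-0123456789abcdef0")
print(client.calls[0]["LifecycleActionResult"])
```

Passing `ok=False` when installation fails sends `ABANDON`, so a broken instance gets replaced right away instead of waiting for the hook to time out.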

After deploying the code with life-cycle hooks, our new instances won’t even register with the ALB until they’re ready. The health check delay issue still exists so we can still get into fail-open. But at least the new instances that are definitely not ready won’t be sent any requests and the user won’t be getting 502 errors.

It turns out CodeDeploy uses life-cycle hooks. Using CodeDeploy to deploy the code without recreating the instance is another solution for this issue.